After more than a year in service, our High Performance hardware is still delivering impressive surprises. Whether you’re on Virtual Servers or Cloud Containers, and whatever software you’re running, the speed boost from migrating from the standard platform to High Performance is incredible.

Take our customers at Sunny Side Up, who saw improvements of 3×-to-7× just from migrating client systems onto High Performance Cloud Containers. Their Project Director Martijn says:

“From a sales perspective it’s easy to explain that if we use SiteHost, it’s going to be faster.”

It feels good to exceed people’s expectations. And earlier this year we discovered that even inside SiteHost HQ, High Performance hardware is fast enough to raise eyebrows.

In our case we’ve supercharged MySth, our core backend API. As our team handbook puts it, “MySth is the brain behind SiteHost that powers nearly everything”. The faster it runs, the more productive we can be.

As we dive into the results, keep these important facts in mind:

This project was a straight migration with no code optimisations or other performance tweaks. No changes to MySQL or PHP configs either. The results are 100% down to infrastructure.

MySth is the backbone of our business. The fact that we’ve put this system on High Performance hardware tells you everything you need to know about its stability and reliability.

What’s changed?

MySth had been running on older hardware that, while capable, had been in service for a number of years. It’s been replaced with much more modern and speedy tin:

| Component | Before | After |
| --- | --- | --- |
| Processor (CPU) | Intel Xeon E5-2630 v3 | AMD Ryzen 9 7950X3D |
| Memory | DDR3 RAM @ 1866 MT/s | DDR5 RAM @ 3600 MT/s |
| Storage | PCIe Gen 3.0 NVMe and SATA 3 SSDs | High-speed PCIe 4.0 NVMe SSDs |

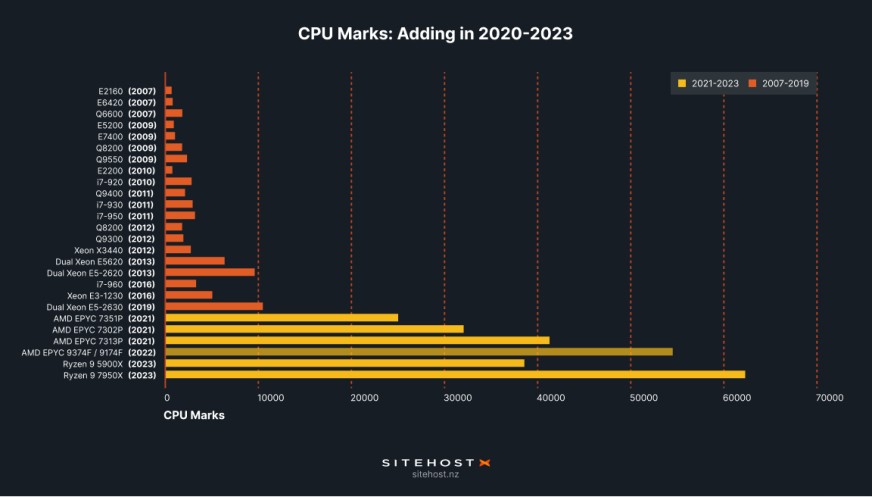

When we blogged about the improvement in CPUs over the years, we reported CPU Marks (a measure of performance) from both processor families in the table above. There's a six-fold difference between them.

Looking at memory, there are 13 years’ worth of innovation packed into DDR5 RAM vs DDR3. Improvements reported in fs.com's comparison include:

Clock rates from 400–1066 MHz to 2400–3600 MHz

Bandwidth from 6400–17066 MB/s to 38400–57600 MB/s

At the mid-point of each range, the DDR5 figures are roughly four times the DDR3 ones (an increase of a little over 300%).
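For anyone who wants to check the mid-point arithmetic, here is a minimal sketch that uses only the ranges quoted above. The function names are our own, chosen for illustration.

```python
def midpoint(low, high):
    """Return the middle of a numeric range."""
    return (low + high) / 2


def compare_ranges(old_range, new_range):
    """Compare two ranges at their mid-points.

    Returns (ratio, increase_pct): how many times larger the new mid-point
    is, and the same change expressed as a percentage increase.
    """
    ratio = midpoint(*new_range) / midpoint(*old_range)
    return ratio, (ratio - 1) * 100


# Ranges as quoted in the comparison above (DDR3 first, DDR5 second).
clock_ratio, clock_increase = compare_ranges((400, 1066), (2400, 3600))
bw_ratio, bw_increase = compare_ranges((6400, 17066), (38400, 57600))

print(f"Clock rate: {clock_ratio:.2f}x the old mid-point (+{clock_increase:.0f}%)")
print(f"Bandwidth:  {bw_ratio:.2f}x the old mid-point (+{bw_increase:.0f}%)")
```

Both comparisons land at just over four times the older mid-point.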

Turning to storage, it's harder to put exact numbers on the performance improvements brought on by innovation. But comparing the old and new disks above, the move from SATA to PCIe in particular has dropped latency on every read/write operation. Latency is now around 11.5μs (microseconds, or millionths of a second).

In short, every hardware component brings significant individual improvements. Let’s see how it plays out when they work together.

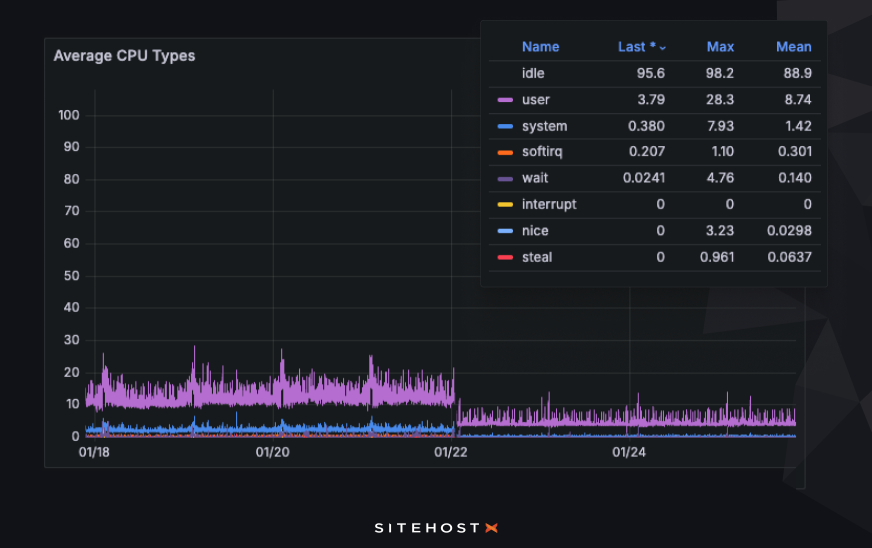

CPU metrics have hit the floor

CPU usage graphs tell us how much of its time the processor spends working. Lower usage shows that the CPU is getting through its work quickly and is less likely to be busy next time a request arrives.

There’s no need to point out where on this graph the migration occurred. In the days leading up to it, the CPU was occupied well over 10% of the time, with daily peaks above 20%. (This shows that the system was already well-resourced, even before the migration. This project wasn't about fixing something; it was more like icing a cake.)

Since then, anything above 10% is out of the ordinary. Arguably the system is over-provisioned but you, and thousands of other customers, can appreciate why we're cautious about these things.

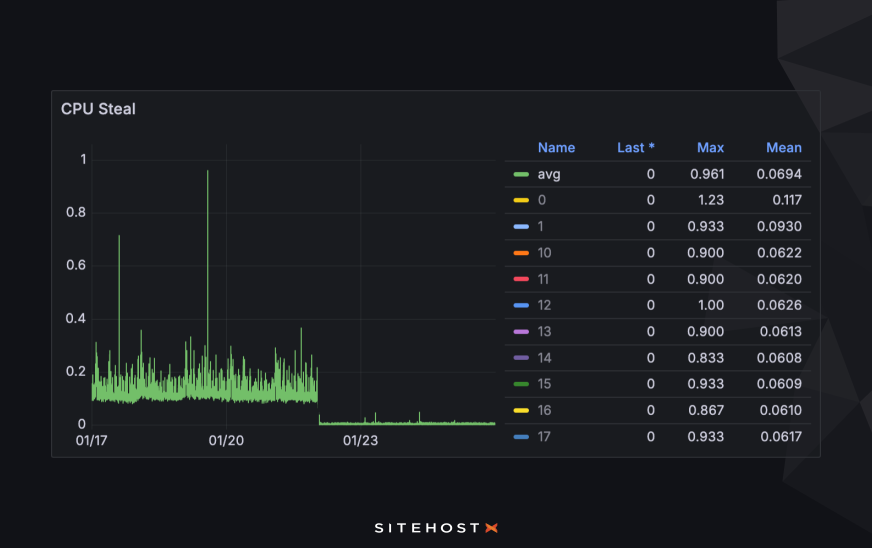

CPU steal, which occurs when a virtual CPU is ready to operate but has to wait on the server's physical CPU to become available, has all but disappeared:

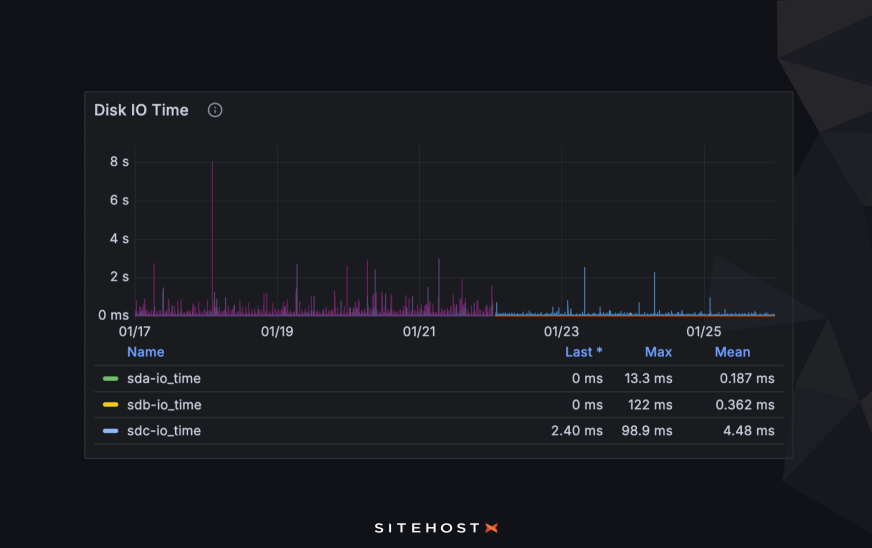
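If you're curious how figures like CPU usage and steal can be derived on a Linux server, here is a minimal sketch. It assumes the standard `/proc/stat` layout, where the aggregate `cpu` line lists cumulative time counters and steal is the eighth value. This is not the tooling behind our graphs, and the two sample lines are made-up numbers for illustration.

```python
# Counter names in the order they appear on the "cpu" line of /proc/stat.
# Guest counters follow these, but are already counted inside user/nice.
FIELDS = ("user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal")


def parse_cpu_line(line):
    """Turn an aggregate 'cpu ...' line into a dict of cumulative counters."""
    parts = line.split()
    if not parts or parts[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    values = [int(v) for v in parts[1:1 + len(FIELDS)]]
    if len(values) < len(FIELDS):
        raise ValueError("line has too few counters to include steal")
    return dict(zip(FIELDS, values))


def cpu_percentages(before, after):
    """Percentage of time spent busy and stolen between two samples."""
    delta = {name: after[name] - before[name] for name in FIELDS}
    total = sum(delta.values())
    if total <= 0:
        raise ValueError("samples must be taken in order, some time apart")
    not_working = delta["idle"] + delta["iowait"] + delta["steal"]
    return {
        "busy": 100 * (total - not_working) / total,
        "steal": 100 * delta["steal"] / total,
    }


# Hypothetical samples taken a few seconds apart (not real measurements).
sample_a = parse_cpu_line("cpu  1000 0 500 8000 300 0 100 100 0 0")
sample_b = parse_cpu_line("cpu  1150 0 560 8700 320 0 110 160 0 0")

result = cpu_percentages(sample_a, sample_b)
print(f"busy: {result['busy']:.1f}%  steal: {result['steal']:.1f}%")
```

On a real machine you would read the first line of `/proc/stat` twice, a few seconds apart, instead of using hard-coded samples.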

Disk metrics

Every input/output operation (IO) is done while the rest of the system waits for data to be written to, or read from, disk. As you can see, times spent on IO operations were regularly measured in whole seconds before the change. Today the graph has lost almost all its spikes and we're usually looking at tiny fractions of a second.

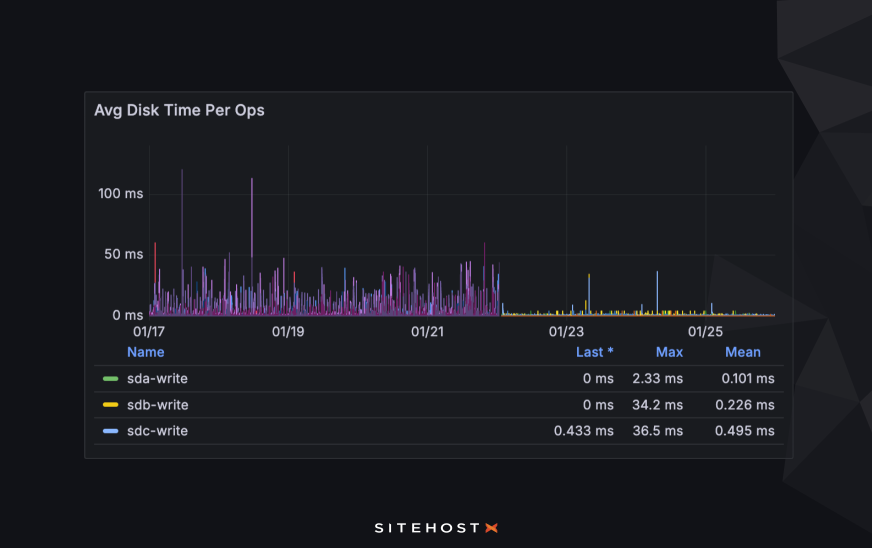

Breaking this down further and looking at each operation, individual jobs are ridiculously fast now. You’re not misreading the “Mean times” column - things really are happening within tenths of a millisecond.

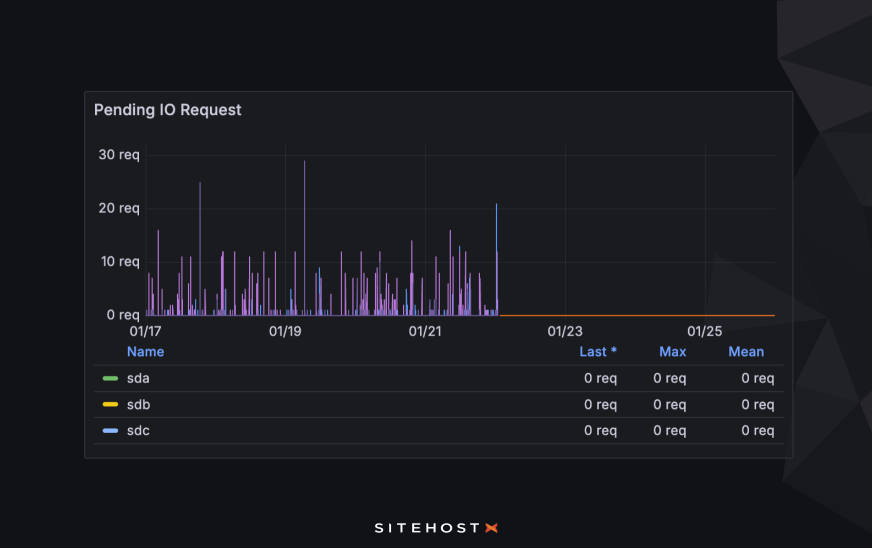

The change in pending IO requests is so good that it’s almost comical. Requests used to queue up but now, quite simply, they don’t. As well as happening faster, everything happens on demand.

Processing jobs through the API

Now let’s look at things from the other side. How much quicker is the API to use?

Public API transactions

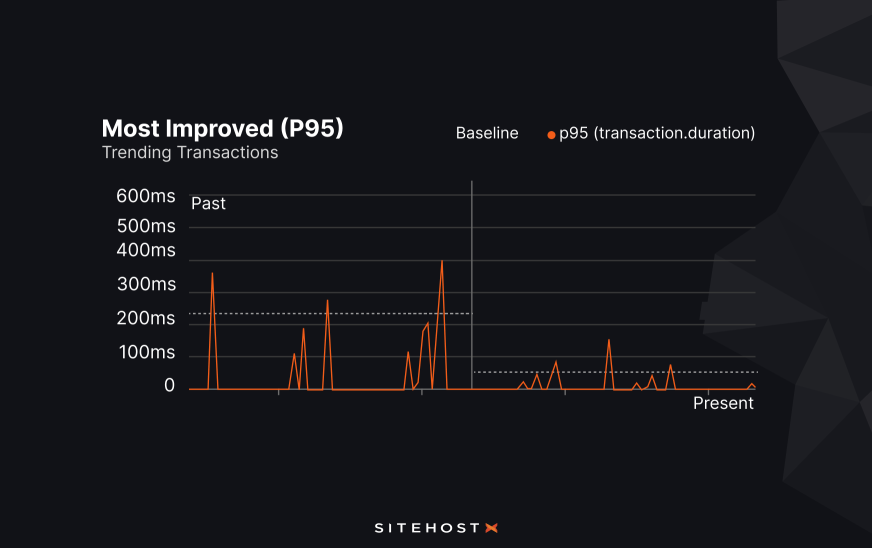

Here’s how much faster public API response times have become, specifically on the /cloud/stack/ path, which is a heavy and thus slower endpoint for us. We’re looking at the 95th percentile (that is, the response time that 95 out of every 100 transactions come in under, leaving only the slowest five above it), which tells us about pain points while excluding outliers.

The dotted lines give the average before and after the migration to High Performance. As you can see, the sort of job that used to take over 300ms now needs less than 100ms. In short, all those improvements in disk and CPU metrics mean that API transactions take much less time.
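To make the 95th percentile concrete, here is a minimal sketch using the nearest-rank method. Monitoring tools may interpolate slightly differently, and the response times below are invented purely to make the result easy to read.

```python
import math


def percentile_nearest_rank(values, pct):
    """Return the smallest value that at least pct% of values do not exceed."""
    if not values:
        raise ValueError("need at least one value")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based position
    return ordered[rank - 1]


# Hypothetical response times: 100 transactions taking 1 ms, 2 ms, ... 100 ms.
times_ms = list(range(1, 101))

p95 = percentile_nearest_rank(times_ms, 95)
slower = [t for t in times_ms if t > p95]

print(f"p95 = {p95} ms, with {len(slower)} of {len(times_ms)} transactions slower")
```

In this made-up sample, 95 transactions finish at or under the p95 value and only the slowest five sit above it.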

Admin API transactions

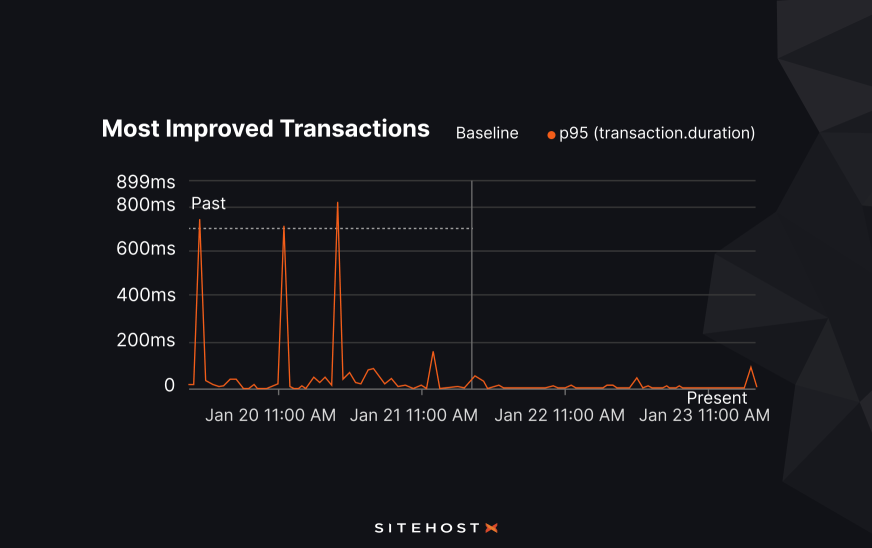

The admin API, which we use internally, has seen a much bigger improvement in transaction time. Its transactions are more complex than those on the public API above. At the 95th percentile, the average time used to be around 700ms. The upgrade dropped that number well below 100ms.

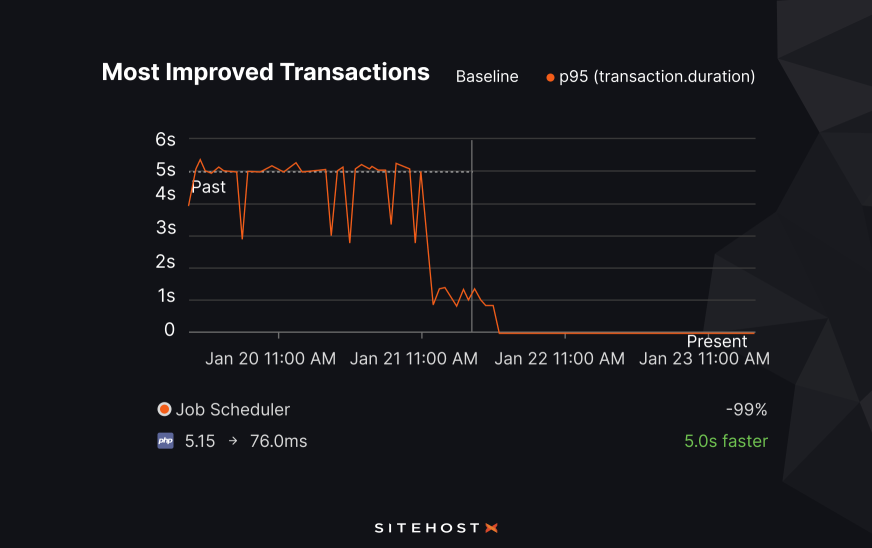

Internal infrastructure jobs

Here’s something tasty to finish: the transactions, or tasks, that have sped up the most. There used to be a bit of a wait for server actions like provisioning a new VM, creating Cloud Containers, or adding SSH users.

Not anymore. The average has dropped from over 5 seconds to a barely-perceptible 76 milliseconds. This is a 99% improvement!
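Here is the arithmetic behind that percentage, as a short sketch. It takes 5,000 ms as the starting point; since the old average was over 5 seconds, treat the results as a lower bound.

```python
def reduction_pct(before_ms, after_ms):
    """Percentage of the original time that has been eliminated."""
    return (before_ms - after_ms) / before_ms * 100


def speedup(before_ms, after_ms):
    """How many times faster the new figure is than the old one."""
    return before_ms / after_ms


# 5,000 ms is a floor for the old average; 76 ms is the new average.
before_ms, after_ms = 5000, 76

print(f"Time saved: {reduction_pct(before_ms, after_ms):.1f}%")
print(f"Speed-up:   {speedup(before_ms, after_ms):.1f}x")
```

Starting from exactly 5 seconds, that works out to about 98.5% less time, or roughly 66 times faster.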

What could a move to High Performance do for you?

The results above show what’s possible just by moving from standard to High Performance hardware. They also confirm what the benchmarking data told us when we launched High Performance: this stuff is fast. It’s worked for Sunny Side Up’s Cloud Containers, and it’s worked for our backend API.

A move onto High Performance hosting is a very cost-effective way to speed up your websites and software. Compare the prices of standard and High Performance Cloud Containers or Virtual Servers to see for yourself that both options are in the same ballpark. You could invest thousands of dollars in code optimisation, DevOps and developer time and never get the same results as you would from a server upgrade. As our Technical Director, Quintin, put it to Website Planet recently: "Chips are cheaper than labour, right?"

Upgrading a server is a quick and easy live process, with no downtime. Today could be the day that you start seeing game-changing performance metrics. Or if there’s anything you want to know first, just ask us. Not only did we build the High Performance platform, we’re also one of its most satisfied users.