The search for optimal performance reaches your database

When database performance is slowing you down take a look at your queries and plugins, and check whether it might be time for an upgrade.

When we've written about performance lately, it's mostly been about High Performance Cloud Containers and the impressive boosts that customers have seen after moving onto that platform. So this is a timely reminder that there's always more than one place to look, or one solution to reach for, when things start slowing down.

Take databases, for example. Our monitoring team has occasionally noticed spikes in server resource usage which didn’t seem to specifically point to a single container or website as the culprit. A closer look at these server's metrics pointed at the database containers instead. So here's a closer look at the various database containers that power all of your Cloud Containers.

A quick tweak that can often help: Profiling queries

Two common ways that queries can affect performance is by being inefficient (i.e. slow), or being long. We recommend profiling queries to find opportunities to speed up or shrink your database queries.

Slow queries

One of the first red flags we look for when troubleshooting database performance are slow queries. These are queries that take a long time to run, often due to poor indexing, inefficient joins, or excessive complexity.

As a first example, let's start with a query that looks correct at a glance:

SELECT

e.employee_id,

e.first_name,

e.last_name,

d.department_name

FROM

employees e

INNER JOIN

departments d ON e.department_id = d.department_id

WHERE

e.employee_id IN (

SELECT employee_id

FROM employees

WHERE salary > 70000

);

This filters employees with salaries greater than $70,000 and joins their department data. However, this query is inefficient because:

- The

WHEREclause creates a temporary in-memory table. - The employees table is scanned twice.

A better version would be:

SELECT

e.employee_id,

e.first_name,

e.last_name,

d.department_name

FROM

employees e

INNER JOIN

departments d ON e.department_id = d.department_id

WHERE

e.salary > 70000;

Now we’re scanning the employees table only once, which avoids unnecessary memory use and extra processing time.

For a more complex case, the inefficiencies in this more advanced query are less obvious:

SELECT

o.order_id,

o.customer_id,

o.order_date,

(

SELECT SUM(oi.unit_price * oi.quantity)

FROM order_items oi

WHERE oi.order_id = o.order_id

) AS order_total

FROM

orders o

WHERE

EXISTS (

SELECT 1

FROM customers c

WHERE c.customer_id = o.customer_id

AND LOWER(c.region) = 'europe'

);

This query looks reasonable and might even be generated by an ORM or AI prompt. But it introduces two key performance issues:

- The subquery in the

SELECTclause is correlated, meaning it runs once per row in the orders table. This can lead to thousands of extra queries for large datasets. - The

LOWER(c.region) = 'europe'condition prevents the use of indexes on region, forcing a full scan of the customers table.

An optimised version of this query would look like this:

SELECT

o.order_id,

o.customer_id,

o.order_date,

SUM(oi.unit_price * oi.quantity) AS order_total

FROM

orders o

JOIN customers c ON o.customer_id = c.customer_id

JOIN order_items oi ON o.order_id = oi.order_id

WHERE

c.region = 'europe'

GROUP BY

o.order_id, o.customer_id, o.order_date;

Key improvements:

- The

SUM()is calculated usingGROUP BY, allowing the engine to optimise aggregation in a set-based way. - The region filter is now index-friendly (sargable), improving speed on large tables.

Caveat: For the index to be useful and for all of the records to be searched, region values should be stored consistently (e.g. all lowercase). This can be enforced on insert or through regular cleanup jobs.

Long queries

In many cases, slow database queries are also very long—either in sheer character length (the current SiteHost record sits in the millions), the number of joined tables, or the number of rows that they attempt to filter. We've seen queries with massive IN( ) clauses, dozens of conditions, or deeply nested SELECT statements — all trying to power a widget, analytics tracker, or auto-suggest feature.

Here is an extremely shortened example of the aforementioned record holder. The original had a character count of 5796207. As well as cutting that down, we've also removed customer data. But you'll still get the idea:

SELECT COUNT(DISTINCT(product.id)) AS count FROM `product` JOIN client_account_product AS cap ON cap.product_id=product.id WHERE 1 AND status = 1 AND hide = 0 AND user_id = '5' AND type = 'product' AND sys = 0 AND (type_variant = 1 OR type_variant = 0) AND ((name LIKE '%Very long string of non unicode characters%') OR EXISTS (SELECT 1 FROM product AS child WHERE child.parent_id

…

OR EXISTS (SELECT 1 FROM product AS child WHERE child.parent_id = product.id AND (child.sku LIKE '%Verys longs strings of non unicodes character%')) OR MATCH(name,sku) AGAINST ('Very long string of nons unicode characters' IN BOOLEAN MODE) OR MATCH(description)

…

OR MATCH(name,sku) AGAINST ('Very long strings of nons unicode characters' IN BOOLEAN MODE) OR MATCH(description) AGAINST ('Very long strings of nons unicode characters' IN BOOLEAN MODE)) AND cap.client_account_id='1' ORDER BY product.id ASC LIMIT 0,99999999

This is simply searching multiple columns of a table for all singular and plural versions of every word in the search string. As well as being an example of an overly long query, the above case can also be abused, which makes things even worse.

Search forms, AJAX filters, and other dynamic content endpoints are often exposed to bots or malicious actors who deliberately or inadvertently expand queries by adding multiple search terms, wildcards, or complex filters. This can drastically increase the strain on your database — even with caching in place.

That’s why we recommend that you implement a few simple precautions on your searches, filters and catalogues where possible:

Limit the number of fields a user can simultaneously search by.

Cap the depth or scope of filters, especially for unauthenticated users. Consider adding CAPTCHA or other mitigations to more advanced searches, or requiring that the user is already logged in.

Restrict the input length of search boxes to prevent overly long strings from being passed to the database. 255 characters are usually more than enough.

By trimming these queries down—removing redundant joins, restricting query inputs, and sometimes even just swapping in lighter alternatives—our customers saw faster page loads and lower database CPU usage.

WordPress and other CMSs: Don’t plug in a performance problem

If you’re running WordPress or other popular CMS platforms, it’s not always as simple as improving database queries in the ways we’ve suggested above. That’s because a lot of slow queries originate from plugins. While plugins add useful features that can substantially improve the user experience, some are notorious for generating bloated SQL that hits multiple tables, using subqueries poorly, or attempting to load far more data than necessary.

Since you don’t have a lot of direct control over the queries that a plugin sends, these are problems that you need to avoid rather than solve.

Our advice here is probably something that you’ve heard before. Every time you select a plugin to add to your CMS, consider the impact it will have on your website’s overall performance. It can be a good idea to run some before-and-after benchmarking tests, for example with PHP Vitals, to see what effect a new plugin has on performance.

When the engine is the bottleneck

Understanding database queries and reducing their impact can improve performance substantially, however sometimes the bottleneck isn’t just the query. Sometimes it’s the database engine itself. That’s where upgrading to something faster, like MariaDB 10.11, makes a measurable difference.

Numbers that speak for themselves

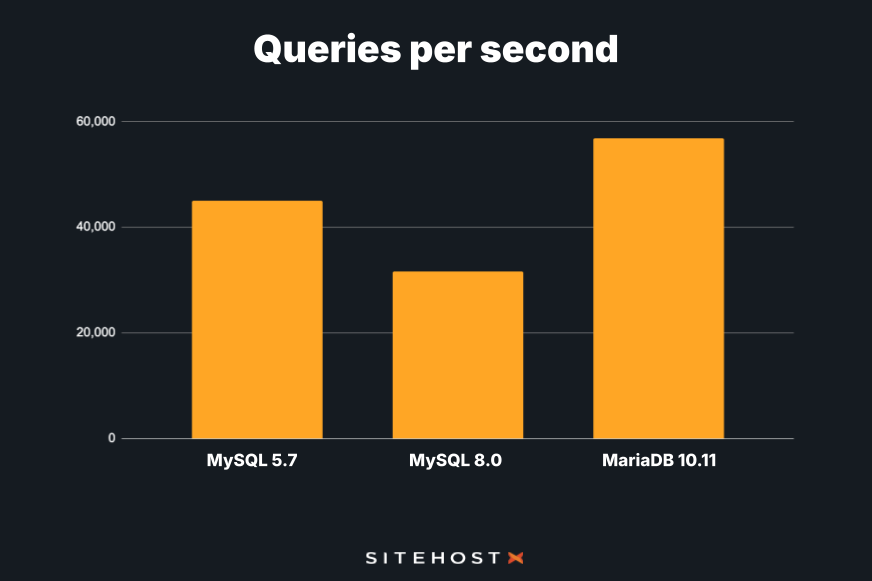

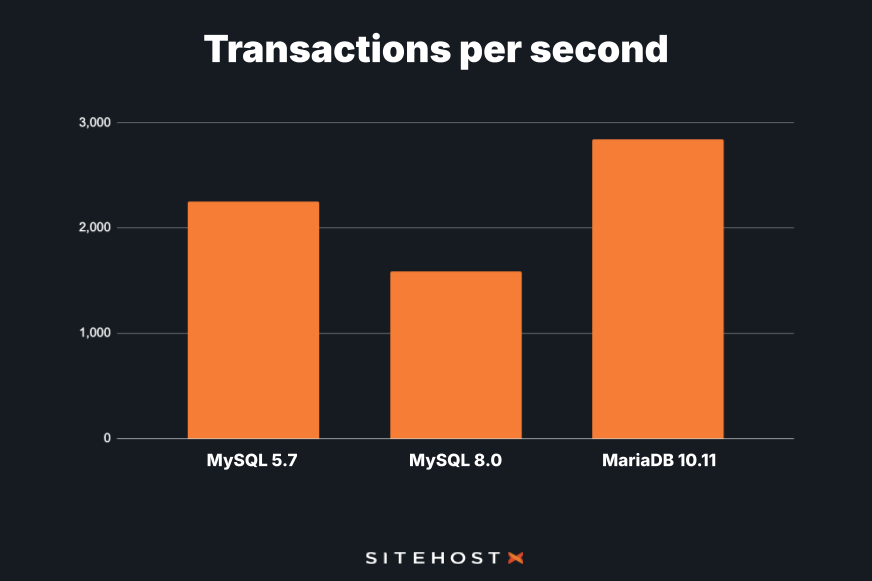

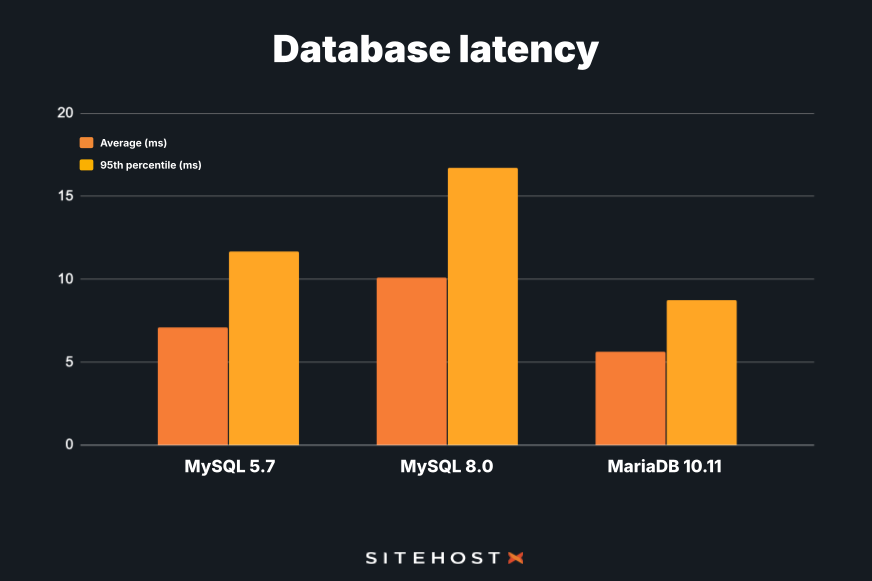

To see the difference we ran a series of controlled benchmarks using Sysbench, simulating a read/write workload with 16 threads for 60 seconds. We tested MySQL 5.7, MySQL 8.0, and MariaDB 10.11, all on the same Cloud Containers Server (High Performance, of course).

The first two graphs show how many queries and transactions each database can handle per second. Higher numbers are better. It’s interesting that MySQL 8.0 is not as quick as MySQL 5.7, but the headline is that MariaDB is the top performer on both metrics.

Latency measures waiting time, or the delay between a request being sent and a response being received. So in this next graph, lower figures are better. Again, MySQL 8.0 is the slowest and MariaDB 10.11 is the quickest.

When we line all of these results up, it’s clear that MariaDB 10.11 is the winner on all counts. So if you’re struggling with a slow MySQL database, another route to quicker performance is a database upgrade.

By the way, if you’re still using MySQL 5.7 then another good reason to move on is that your database reached its end of life (EOL) in October 2023. There have been no upgrades or security patches since then.

Performing your own upgrade to MariaDB on Cloud Containers

Because you can run MySQL and MariaDB containers side-by-side, you have the option to do the migration yourself. All you need is enough spare resources on your server or, if you don’t, you could reach out for a temporary server upgrade to get the migration done (just remember to skip the disk upgrade).

The process is fairly simple, and the Knowledge Base can walk you through most of it.

- Add a new MariaDB 10.11 container (see: Creating a Container).

- Export data from MySQL (see: Connecting to a Database—Database Dumps).

- Create your database and users (see: Managing Databases and Managing Users).

- Import data to MariaDB: The command may vary depending on your database but will likely be something like:

mysql -h mariadb1011 -u USERNAME -p DATABASE < FILENAME.sql - Test the migration: You can either clone your existing container for extended testing or wait until the right time to test the changeover by updating the configuration files and rollback if it didn’t go so well. You’ll want to test your website thoroughly to avoid any surprises, paying special attention to searches and anything that may add data.

Or we can help

If a DIY upgrade isn’t for you, or if you’d like an expert look into database performance issues, that’s what we’re here for. We’ve done this work before, and we can do it again. Our team has helped customers audit query performance, review slow logs, profile live workloads, and switch database engines when it made sense.

Note: Profiling and database tuning are part of our paid support services. If you’re interested, just get in touch with our team and we’ll talk through what’s involved.

Database performance: A quick recap

Shorten your queries where possible — less work means faster results.

Where CMS plugins are involved, take care not to adopt software that taxes database performance too hard.

MariaDB 10.11 is faster than both MySQL 5.7 and 8.0 on important benchmarks.

Cloud Containers have all the tools you need to make your own move from MySQL to MariaDB.

We’re happy to help — especially if your current database feels like it’s holding you back.

Ready to move to MariaDB or want us to do some profiling for you? Let’s chat.