Website speed: Know where the time goes with Xdebug on Cloud Containers

On Cloud Containers, it's easy to break down every millisecond your PHP code spends generating a page with the PHP extension Xdebug.

This week we've added a new Knowledge Base article, Profiling a site using Xdebug on Cloud Containers. If you have a PHP-based website that's running slowly, profiling its performance is a crucial part of the optimisation process. Data from Xdebug tells you how long each function takes as a webpage loads, so you know where to focus your efforts to speed things up.

Xdebug is a PHP extension that's really easy to use on Cloud Containers.

How slow is too slow? When does a site need profiling?

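Profiling your site only makes sense when you know that there's a performance issue to find and fix. We recommend using time to first byte (TTFB) as the measuring stick. TTFB is the time between sending a request and receiving the first byte of the response, so it includes the time the server spends generating the page, which is where your PHP code runs. It's also important, easy to understand, and easy to measure.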

To find your TTFB value, use this simple curl request:

```shell
curl -o /dev/null -H 'Cache-Control: no-cache' -s -w "Connect: %{time_connect} TTFB: %{time_starttransfer} Total time: %{time_total} \n" https://example.com
```

Google's web.dev site calls TTFB "a foundational metric" and recommends that you aim for less than 0.8 seconds. In practice, we treat anything above 0.5 seconds as too slow, and as a rule of thumb that's the point to start looking for optimisation opportunities.
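A single curl request can be skewed by a momentary network blip, so it can help to take several samples and compare the median against the threshold. Here is a minimal POSIX shell sketch that reuses the curl options from the command above. The script name `ttfb.sh`, the `THRESHOLD` and `SAMPLES` variables, and the choice of five samples are our own assumptions for illustration:

```shell
#!/bin/sh
# Sketch: sample TTFB several times and compare the median with a threshold.
# THRESHOLD defaults to this article's 0.5 s rule of thumb; SAMPLES=5 is an
# arbitrary choice. Usage: ./ttfb.sh https://example.com

THRESHOLD="${THRESHOLD:-0.5}"
SAMPLES="${SAMPLES:-5}"

# Print the median of newline-separated numbers on stdin, to 3 decimal places.
median() {
  LC_ALL=C sort -n | awk '{ v[NR] = $1 }
    END {
      if (NR % 2) { printf "%.3f\n", v[(NR + 1) / 2] }
      else { printf "%.3f\n", (v[NR / 2] + v[NR / 2 + 1]) / 2 }
    }'
}

# Succeed (exit status 0) when TTFB $1 is above threshold $2.
is_slow() {
  awk -v t="$1" -v max="$2" 'BEGIN { exit !((t + 0) > (max + 0)) }'
}

# Print one TTFB sample per line for URL $1.
measure() {
  i=0
  while [ "$i" -lt "$SAMPLES" ]; do
    curl -o /dev/null -H 'Cache-Control: no-cache' -s \
      -w '%{time_starttransfer}\n' "$1"
    i=$((i + 1))
  done
}

main() {
  ttfb=$(measure "$1" | median)
  if is_slow "$ttfb" "$THRESHOLD"; then
    echo "Median TTFB ${ttfb}s is above ${THRESHOLD}s: worth profiling"
  else
    echo "Median TTFB ${ttfb}s is within ${THRESHOLD}s"
  fi
}

# Only measure when a URL is given, so the functions can also be sourced.
if [ "$#" -gt 0 ]; then
  main "$1"
fi
```

Run it as `./ttfb.sh https://example.com`, or as `THRESHOLD=0.8 ./ttfb.sh https://example.com` to check against web.dev's figure instead. A consistently high median is a stronger signal than one slow request.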

Optimising site speed can happen all throughout your tech stack. At the top, CMS plugins and oversized images are worth paying attention to. At the very bottom, faster hardware can make a big difference. In the middle sits your application code, and that's what Xdebug can tell you all about.

Why Xdebug?

Xdebug is a powerful PHP extension for debugging and profiling PHP code. You can use it to inspect variables, trace function calls, and follow the flow of script execution. Finding bugs and performance problems this way improves the overall quality of your code.

The most valuable uses of Xdebug include:

Debugging intricate code logic.

Fine-tuning your code's performance to make it faster and more efficient.

Identifying performance bottlenecks.

Gaining deep insights into code execution during development.

The insights that you'll gain include:

Detailed breakdown of function calls and variable values.

Monitoring execution time and script performance.

Xdebug and Webgrind work as a team

Getting set up to analyse profiling data involves three main components:

Xdebug: The PHP extension that collects the profiling data.

Cachegrind files: The profiling data that Xdebug writes, in a Cachegrind-compatible format.

Webgrind: A user-friendly interface for visualising and interpreting Cachegrind reports.

If you've already read Profiling a site using Xdebug on Cloud Containers, you'll have seen how to enable Xdebug and start collecting data. While profiling is enabled, Xdebug writes a cachegrind file every time a page loads. This is resource-intensive, so it's best to profile live websites at quiet times, when fewer visitors will notice that the site is less responsive than usual.
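Because profiling every request is what makes it resource-intensive, one option is to profile only the requests you choose. The sketch below assumes Xdebug 3 in trigger mode, with the settings shown in its comments. Those settings, the `OUTPUT_DIR` variable and the two helper functions are assumptions for illustration, so check them against the Knowledge Base article and your own configuration. It also assumes you run it somewhere that can read Xdebug's output directory, such as inside the container:

```shell
#!/bin/sh
# Sketch, assuming Xdebug 3 is configured along these lines (these values
# are assumptions; your Knowledge Base setup may differ):
#   xdebug.mode = profile
#   xdebug.start_with_request = trigger
#   xdebug.output_dir = /tmp
# In trigger mode, only requests carrying XDEBUG_TRIGGER are profiled.

OUTPUT_DIR="${OUTPUT_DIR:-/tmp}"

# Load one page with the trigger cookie so Xdebug profiles just that request.
profile_page() {
  curl -s -o /dev/null --cookie 'XDEBUG_TRIGGER=1' "$1"
}

# List the N newest profiles (Xdebug's default name is cachegrind.out.<pid>),
# newest first. Prints nothing if there are no profiles yet.
latest_profiles() {
  ls -t "$OUTPUT_DIR"/cachegrind.out.* 2>/dev/null | head -n "${1:-5}"
}

# Only make a request when a URL is given, so the functions can be sourced.
if [ "$#" -gt 0 ]; then
  profile_page "$1"
  latest_profiles 1
fi
```

For example, saving this as a hypothetical `profile-once.sh` and running `./profile-once.sh https://example.com/some-page` would profile that single page load and print the newest cachegrind file, ready to open in Webgrind.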

Viewing and interpreting results

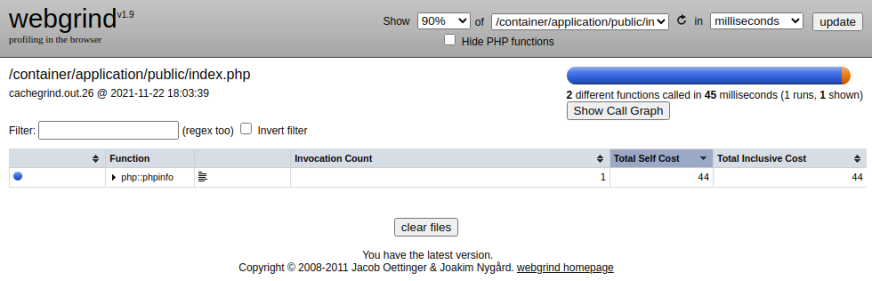

If you've followed the Knowledge Base article, your Webgrind interface will be available on example.com/webgrind. Here, you'll see something like this:

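You can switch the units between milliseconds and percentages of the total time. It's also useful to sort the results by cost, so you can see which functions take longest. Self cost counts only the time spent inside a function itself, while inclusive cost also counts the functions it calls.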

Some functions will naturally take longer than others, so use your judgement here and focus on the absolute time you can save. Halving a 0.02s function saves the same 0.01s as improving a 0.1s function by 10%.
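Spelled out, the saving is the original time multiplied by the fraction you remove:

$$
0.02\,\text{s} \times 50\% = 0.01\,\text{s}
\qquad\text{and}\qquad
0.1\,\text{s} \times 10\% = 0.01\,\text{s}
$$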

Where functions look like they're taking too long to execute, there can be different underlying causes. A few possibilities to look for:

Inefficient code which you can fix yourself.

Code that belongs to a CMS plugin and is causing an incompatibility.

Nested loops that can be optimised.

Overly complex algorithms.

Function calls: Reducing overhead

Webgrind shows you how often each function is called and how much time those calls cost. Either a high call count or a high cost can signal that a function is significantly affecting performance. For example, if a function runs a database query on every iteration of a loop, that could be slowing your application down.

Also look for functions that repeatedly fetch external resources, such as an image hosted on a remote server. Any latency on that connection adds to your overhead on every call, and it also makes your site dependent on that server being available.
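The overhead from repeated calls grows with the number of calls. As a rough illustration with purely hypothetical figures, 30 remote fetches per page load at 50 ms of latency each add:

$$
T = n \times t_{\text{call}} = 30 \times 0.05\,\text{s} = 1.5\,\text{s}
$$

whereas fetching the resource once and reusing the result costs roughly a single call's latency, around 0.05 s.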

Once you've identified which functions to optimise, you can speed them up directly or reduce how often they're called, and either way you'll improve your site speed. Possible actions include caching the results of expensive operations, refactoring your code to remove redundant work, or moving externally hosted resources onto your own server.
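As one illustration of moving a resource onto your server, here is a minimal shell sketch that downloads a remotely hosted file into a local directory once and reuses that copy afterwards. The `localise_asset` function, its arguments and the example paths are hypothetical, and it assumes URLs without query strings, since it names the local file after the last part of the URL:

```shell
#!/bin/sh
# Hypothetical helper: copy a remotely hosted asset into a local directory
# once, so pages can serve the local copy instead of fetching it remotely.
# Prints the local path on success.
localise_asset() {
  url="$1"
  dest_dir="$2"
  dest="$dest_dir/$(basename "$url")"

  # Reuse the local copy if it already exists (a simple file-based cache).
  if [ ! -f "$dest" ]; then
    mkdir -p "$dest_dir" || return 1
    # -f treats HTTP errors as failures; remove any partial download.
    if ! curl -fsS -o "$dest" "$url"; then
      rm -f "$dest"
      return 1
    fi
  fi
  printf '%s\n' "$dest"
}
```

For example, `localise_asset https://cdn.example.net/logo.png /var/www/html/assets` would fetch the image once and print its local path, which your templates could then reference instead of the remote URL.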

Keep profiling and keep learning

That's the end of this quick tour of Xdebug. It's a useful profiling tool for uncovering hard-to-find performance bottlenecks, and it gives you invaluable insight into the way your code actually behaves. It lets you be more systematic as you analyse execution time, function calls, and call stack traces. Coupled with Webgrind, it puts a lot of useful data in front of you.

But you're never done with optimisation. Improving website performance is a continuous process. The more you know at each level of your tech stack, the better. And, as always, if you ever have an issue that looks like it's on the hosting and infrastructure side, we're here to help.

Photo by Anton Makarenko on Pexels