Improving the performance of your Laravel queues

While investigating slow servers, we discovered some common performance issues with Laravel queues. Here’s how to speed things up for your sites.

As a hosting company, we are always looking for ways we can improve our performance and stability. It can be easy for us to only focus on how we can optimise our infrastructure, but we can't forget that the applications we host can also have a large impact on their own performance.

Tracking recent performance metrics, we found a number of websites running the Laravel framework starting to “top the charts” when it comes to sheer resource consumption. Seeing this pattern, we dug a little deeper to find - and fix - common causes.

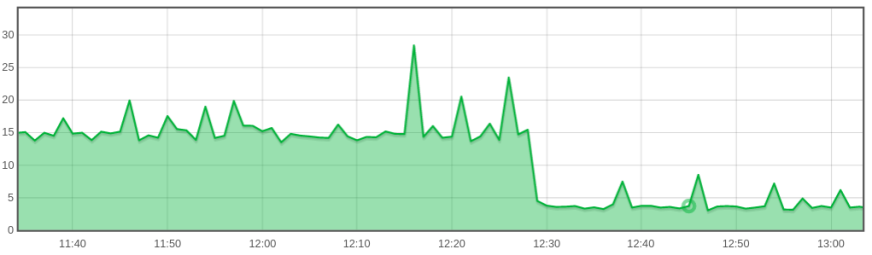

We've identified a few simple changes that can have a big effect on your server's load and CPU usage. How much of an effect? Here's what happened to one customer server with these changes:

Could your Cloud Container servers get similar gains? Let's find out.

It's all about queues

Most web applications today will involve some long-running activities, such as processing orders or sending emails. In most cases you don't want the end user to wait for these to complete, so you spin them off asynchronously. In Laravel, this is handled through queues. When a task comes in, it is added to the queue and (usually) handled by a separate process. This design ensures that the task is completed and frees up the user to continue browsing your site.

The issues we found with various Laravel sites boiled down to how these queues were set up. In some cases this led to a few worker processes using a large share of server resources, or too many worker processes being spun up to handle a relatively small workload. Either way, the result was that the server was overworked. This resulted in slower performance and in some cases downtime for the website, and other sites hosted on the same machine.

Here’s what we found, and the changes we made to speed things up. If your sites are queuing jobs in Laravel, there might be some useful tips ahead.

Running Queues With Supervisord

In the Laravel documentation on running queue workers, the suggested method to have the worker run in the background is to use Supervisord. This is essentially a program that manages other processes, and it’s what we currently use in Cloud Container web images. Laravel even has a section on configuring supervisord.


Several sites we investigated made use of this feature and had several queue workers running asynchronously in the background. What we noticed, though, was that they tended to use the artisan queue:listen command instead of artisan queue:work. The advantage of using listen is that it will automatically pick up any code or application state changes made after the worker is spun up. This can be good while you’re developing an application. However, it comes with a large performance hit from constant CPU usage, so it is not well suited to a production environment.

The work command, on the other hand, will not detect code or state changes. All this means is that you need to restart the queue workers after making changes to your site, which can be done with the artisan queue:restart command. The upside is that starting the queue this way uses far less CPU on average, and it is highly recommended for any production environment.

Making this small change from listen to work had an immediate effect on websites which had been struggling. The resource usage on the host server came down drastically and most websites saw a large bump in their general performance. We also found there are many other options you can enable to help reduce the impact of running many background queue processes.

Here is the command that was used to start up a queue worker before we made our changes:

php /container/application/artisan queue:listen --queue=default

We then changed it to look more like this:

nice -n 10 php /container/application/artisan queue:work --queue=default --max-jobs=1000 --max-time=3600 --rest=0.4 --sleep=5

Even besides the move to work, there are quite a lot of differences there. Luckily these mostly boil down to the same idea: reducing the amount of CPU being consumed.

We started by setting the nice value a little higher (a higher nice value means a lower scheduling priority), allowing other processes to use the CPU if required.

Next, we set the --max-jobs and --max-time flags. These are not strictly necessary; they just force the worker to exit and be restarted after a certain number of jobs or seconds, which helps keep on top of things such as memory leaks.

The --rest flag specifies a time in seconds to wait between each job, and is used to slow things down a bit and let other processes get some CPU time. It is such a small value you will likely not notice, though feel free to adjust as needed.

Finally, we included a --sleep flag, which tells the worker to take a break for a few seconds if there are no tasks to perform. Setting this reduces the amount of work being performed by the worker, and ensures it’s not constantly polling when there is nothing to process.
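For reference, here is a rough sketch of how the tuned command might sit inside a Supervisord program definition, written out from a shell snippet. The output file name, the program name, numprocs, stopwaitsecs and the log path are all assumptions made for illustration, not values taken from our images, so adjust them to your own setup. Note autorestart=true: a worker that exits cleanly after hitting --max-jobs or --max-time would not be restarted under Supervisord's default setting of autorestart=unexpected.

```shell
# Write a Supervisord program definition for the tuned worker.
# CONF_FILE, the program name, numprocs, stopwaitsecs and the log path are
# illustrative assumptions; change them to match your container.
CONF_FILE="${CONF_FILE:-./laravel-worker.conf}"

cat > "$CONF_FILE" <<'EOF'
[program:laravel-worker]
process_name=%(program_name)s_%(process_num)02d
command=nice -n 10 php /container/application/artisan queue:work --queue=default --max-jobs=1000 --max-time=3600 --rest=0.4 --sleep=5
autostart=true
autorestart=true
numprocs=2
stopasgroup=true
killasgroup=true
stopwaitsecs=60
redirect_stderr=true
stdout_logfile=/container/application/storage/logs/worker.log
EOF
```

Once the file is in the directory your Supervisord configuration includes, running supervisorctl reread followed by supervisorctl update should load the new program. Keep numprocs modest; each extra process is another worker competing for CPU.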

One final note. When using the artisan queue:work command on our standard Cloud Container web images, you will need to enable the pcntl_signal and pcntl_alarm functions. To do this, navigate to the /container/application/php directory and edit the php.ini file. Look for the disable_functions directive, which contains a comma-separated list of functions that are disabled in PHP. Remove pcntl_signal and pcntl_alarm from this list, then restart your container to apply the change.
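If you would rather script that edit than do it by hand, something along these lines could work. This is a minimal sketch: remove_disabled is a hypothetical helper name, and it assumes disable_functions sits on a single uncommented line in php.ini. It keeps a .bak copy of the file and only matches whole list entries, so pcntl_signal_dispatch is left alone when pcntl_signal is removed.

```shell
# remove_disabled FUNCTION FILE
# Hypothetical helper: drops one function name from the disable_functions
# list in a php.ini file, keeping a backup copy as FILE.bak.
remove_disabled() {
  sed -i.bak -E "/^[[:space:]]*disable_functions[[:space:]]*=/ {
    s/([=,][[:space:]]*)${1}[[:space:]]*(,[[:space:]]*|\$)/\1/
    s/,[[:space:]]*\$//
  }" "$2"
}

# Example usage (path taken from the paragraph above):
#   remove_disabled pcntl_signal /container/application/php/php.ini
#   remove_disabled pcntl_alarm  /container/application/php/php.ini
```

Check the resulting disable_functions line before restarting the container, since a php.ini laid out differently from this assumption may need a manual edit instead.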

Running Queues with CRON

The second way we saw websites running their Laravel workers was through the CRON daemon. The first issue we found was that it was often used in conjunction with Supervisord. This means more processes are spun up than necessary, which again hinders the performance of the site. Choosing one method or the other is generally the better way to go. For our Cloud Containers we generally recommend going with Supervisord, as it gives you more control over the process. That said, if you are more comfortable with CRON this can work just as well.

The biggest issue we found with the CRON method was controlling the number of running workers. A simple way to limit this is through the flock tool, which can be used to limit the number of processes running based on a file lock. One such entry may look like this:

*/5 * * * * flock -n /container/application/worker-cron.lock nice -n 10 php /container/application/artisan queue:work --queue=default --rest=0.4 --stop-when-empty

You will notice this contains much of the same logic as in the Supervisord section above, though instead of max jobs/time and sleep we make use of the --stop-when-empty flag. As it sounds, this will tell the worker to stop processing tasks when the queue is empty, finishing up the process. The worker will pick up again once the next CRON invocation comes round and there are tasks in the queue.
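To see why flock -n keeps workers from piling up, here is a small self-contained demonstration. It is only a sketch: sleep stands in for a long-running queue:work process, the lock is a throwaway temporary file rather than worker-cron.lock, and it assumes the flock utility from util-linux is installed.

```shell
# Demonstrate how `flock -n` prevents overlapping runs.
# LOCK is a throwaway temp file used only for this demo.
LOCK="$(mktemp)"

# First "worker": takes the lock and holds it for 3 seconds
# (a stand-in for a queue:work process that is still busy).
flock -n "$LOCK" sleep 3 &
sleep 1  # give the background process time to acquire the lock

# Second "worker" starts while the first is still running. With -n, flock
# exits non-zero straight away instead of waiting, so the command never starts.
if flock -n "$LOCK" true; then overlap="ran"; else overlap="skipped"; fi

wait  # the first worker finishes and releases the lock

# Next "cron tick": the lock is free again, so the command runs.
if flock -n "$LOCK" true; then next_tick="ran"; else next_tick="skipped"; fi

echo "overlapping run: $overlap, next run: $next_tick"
rm -f "$LOCK"
```

In the cron entry this means that if a worker from a previous five-minute tick is still draining the queue, the new invocation exits quietly rather than starting a second worker.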

The best settings can change for every site

We hope these suggestions can help improve your processing of the Laravel queue, though keep in mind that they are just suggestions. These exact settings will not work for everyone, even if we all run the same artisan version. Every site is different and will require some tweaking to get right.

If you have the option, we highly recommend going with Supervisord on our Cloud Containers. This gives you much more predictable performance and more control. As always, if you have any questions or concerns, feel free to reach out to us!